Intro

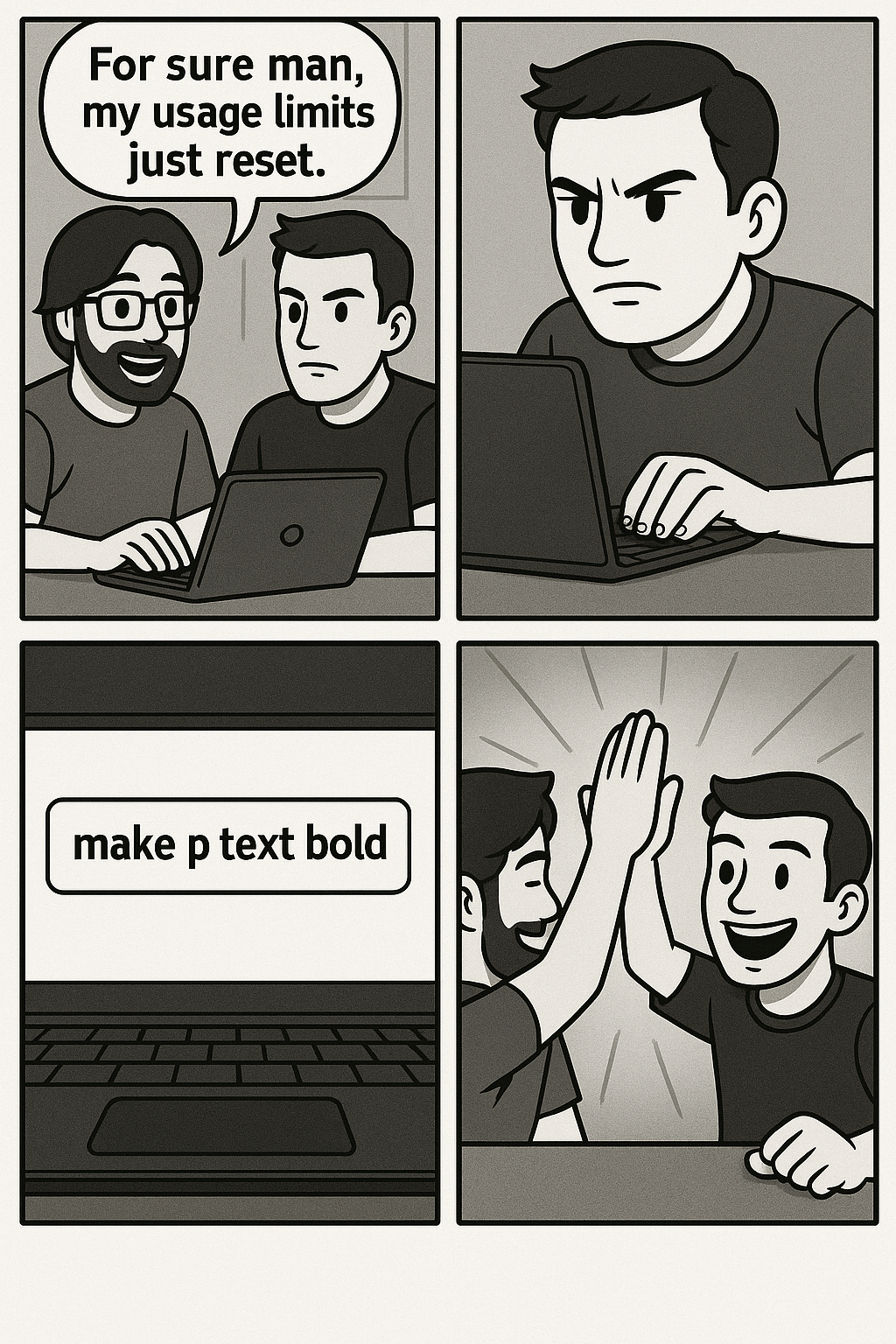

I'm starting this blog because I fear becoming one of the developers in the comic strip above (generated using AI, haha). The comic strip was my idea. I had to go through the cognitively intensive task of taking my inner world and imagination and conveying them within the constraints of language, crafting a series of prompts to get the desired output. But I didn't have to draw anything. I didn't have to learn a new online tool, learn to draw comic strips, or go through the strain of exporting the image. We live in an exciting age where we can use natural language to quickly implement solutions with AI agents. However, I worry that this convenience will atrophy our ability to think critically, and that we'll be increasingly tempted to coast on AI as it gets better at predicting what we want.

I'm starting a blog to hold myself to a higher standard. I want to be a developer who, yes, offloads a lot of the grunt work to AI agents: constructing complex SQL queries, working out the math behind riveting visualizations, and quickly handling common cases. But I'd like to know about that work. I'd like to really know about it, understand it inside and out, and communicate it, so that I can share these solutions instead of leaving them locked away in a chat history or behind a looming usage limit. I want to grow alongside LLMs. The better I understand and communicate complex problems, the more I can extract from LLMs and the more insights I can share with other developers.

Serving My Time Before AI

I started writing software before LLMs and AI agents became ubiquitous. I "served my time" writing code from scratch, scouring Stack Overflow, Reddit, and GitHub threads, and, most importantly, working hard to fully understand a problem so that I could construct the most relevant Google search query to find other developers' solutions to it. It's truly awe-inspiring to see the brilliance of more experienced developers writing complex applications (usually within a team, but still) that make the world go round, with no LLMs. It's like watching an artist recreate a scenic landscape on canvas. Nowadays, we can take a picture of a scenic landscape and have ChatGPT generate a picturesque version of it. But does that further our understanding of the landscape? We don't even need to try; the LLM does it all for us. What if, instead, we had the LLM generate the picturesque image and then tried our own hand at recreating the landscape? Similarly, it's amazing to see an AI agent pump out a full-blown service. Yes, we're careful to set parameters in the prompt, specify the architecture beforehand, and lay out the deliverables, but it's use it or lose it. Don't we want to understand how and why things are done the way they are? Shouldn't we, as developers, strive to close the gap between ourselves and LLMs? I can think of no better way to do so than to write.

Train Your Writing Muscles

I'm having a difficult time writing this article. I never finished college, and it's been a long time since I've had any writing assignments. But writing isn't difficult just because I haven't done it in a while; it's difficult because it's one of the most cognitively intensive things you can do. The good news is that, just like anything else, writing is a muscle that can be strengthened. This is my first article, and my word choice, sentence structure, fluency, and expression of ideas will improve the more I write. Taking your inner world, intuitions, and hunches and getting them down on paper, with the correct words in the proper places, is difficult. Similarly, it's difficult to take real-world problems and turn them into code. But by writing, you exercise the fundamental muscle of taking ideas out of your mind and describing them accurately and precisely. The better you can do this, the better your code will be. The fewer assumptions that need to be made, the less baggage your ideas will carry and the more portable they will be. Since LLMs enable developers to create software using natural language, I believe it's important that developers master natural language: writing and verbalizing as much as, if not more than, code.

Bridging the Prompting Knowledge Gap

As a developer, I want to master natural language. I want to be able to take my intuitions, hunches, and visions and get them on paper in a way that others can understand. It's not enough for me to spam generic prompts and brute-force LLMs until I hit my usage limits. Now, I don't mind letting the LLM write a complex recursive CTE, implement Manacher's algorithm for the longest palindromic substring problem, or even add basic edge cases, but I should at least be able to understand the result. I should be able to describe what the LLM did and why. As I close the gap between my own understanding and the LLM's, I'll be able to reduce wasted prompts, correct the LLM, and keep it from veering off course. And if it shows me something I don't know, I'll be able to understand it, write about it, and hold onto that knowledge myself instead of having it locked behind an old chat or a usage limit.

Conclusion

This is my first article, and it's a bad one. But I'm not going to let perfect be the enemy of good, so I'm publishing it anyway. This is my first step in transitioning from a software developer, someone with familiarity and dexterity in the software development landscape, to a software engineer, someone who can articulate, verbalize, and transmit complex problems.